In a previous article we generated a 720p DVI video signal from an FPGA, producing a 1280×720 pixel test pattern on-screen. Today we're going to revisit that and reach another intermediate milestone on the path to a useful video controller: a component that can receive pixel data from a RAM chip and make it available to the picture generator.

The Story So Far

In our previous installment we learned that video signals are built by emitting one pixel at a time from top left to bottom right (raster), interspersed with blanking periods that mark the end of each line and of each frame by halting emitting pixel data for some period and activating synchronization signals.

We used Verilog to implement a digital logic circuit to produce the necessary signals with the appropriate timings to be understood by a DVI-compatible or HDMI-compatible display as a 720p picture, at 1280 pixels wide and 720 pixels tall.

That Verilog module serves as the timing generator component of our video controller.

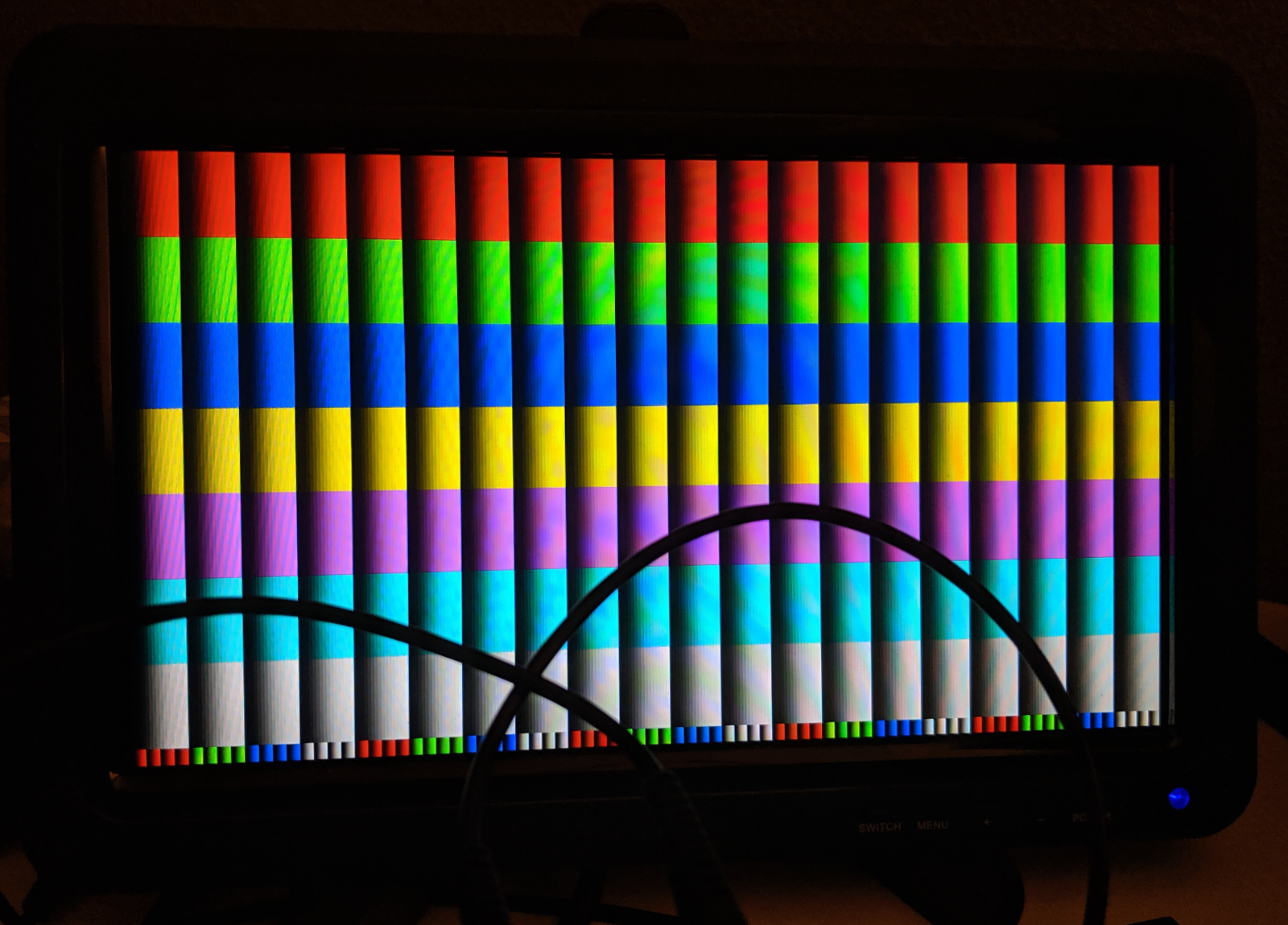

We also implemented a placeholder picture generator in the form of a test pattern:

A test pattern like this avoids the need to interact with any memory because the color of a pixel can be calculated on the fly directly from its X and Y coordinates.

However, a practical display controller ought to be able to display any picture presented (in a suitable format) by software running on an associated CPU. In order to achieve that, we need some sort of memory to store the graphics to display.

Working with RAM

Random Access Memory, or RAM, comes in a number of different varieties. The simplest to work with is static RAM (SRAM), which uses transistor-based latches to store data. Static RAM is convenient because we can freely access any byte in any order, and once we've written some data at a particular address we can be confident that it will remain there as long as the RAM chip remains powered.

Static RAM is commonly used for small memories like the registers and cache inside a CPU. Historically it was also used as main system memory for some microcomputers, but due to the relatively high cost per bit of static RAM it is not cost-effective for the large RAM sizes we tend to expect these days. A single framebuffer for a 1280×720 image, assuming two bytes per pixel, requires approximately 1.76 megabytes of RAM, with a 2 megabyte SRAM starting at $3.50 for a single chip on DigiKey as I write this. I'd prefer to have enough video RAM for at least two framebuffers (double buffering), which would mean either using multiple SRAMs of that size or using a larger single one.

Static RAM is also relatively slow for our intended application. Access times of typical SRAM chips I can find on DigiKey are between 45 and 70 nanoseconds, which is considerably longer than the 13.5 nanosecond pixel clock period needed for a 720p signal.

Main system memory and video memory in today's systems tends to be a different variety of RAM called dynamic RAM (DRAM). DRAM is instead constructed as a matrix of capacitors whose charge (or lack thereof) represents whether or not a particular memory bit is set.

Charged capacitors will gradually leak their charge if left alone, so DRAM does not guarantee that data you've written will remain available as long as power is present. Instead, the device using the DRAM is responsible for refreshing all of the memory locations periodically, by reading the current value and then writing it back again. During the refresh period, the DRAM is busy and cannot be used to read and write other data.

DRAM also requires a particular discipline for reading and writing: reading a bit of memory from the DRAM discharges the associated capacitor, effectively destroying the value. To accommodate that, a DRAM chip senses the value in some subset of bits all at once and latches them into temporary storage that behaves more like a static RAM, thus allowing reading and writing to that subset of addresses before re-charging all of those bits at once. This process is called "opening" and "closing" rows of bits, and in order to access a particular byte of memory we must first open the row containing that byte.

The upshot of all of this is that we cannot assume that we'll be able to read data from a dynamic RAM at a steady rate. If we're accessing a set of bytes that all belong to the same row then we can open that row once and access all of them at once, but if we need data across multiple rows then that'll take longer because we'll need to spend time closing and opening rows. We will also need to periodically interrupt our ongoing processes to deal with refreshing.

Along with the book-keeping tasks, for our video controller to be useful it must also be possible to write to the video memory. Typical RAM modules can't support both reading and writing at the same time (that requires a more expensive dual-port RAM), so we will also need to periodically stop reading current pixel data in order to deal with incoming requests to modify the pixel data in memory.

These characteristics are quite contrary to the requirements of our picture generator module. During the active display periods it needs to be able to reliably acquire one pixel's worth of data (two bytes) every pixel clock cycle, which means every 13.5 nanoseconds. If we were trying to read pixel data directly from RAM, we'd need a RAM that can reliably produce one byte of data every 6.75 nanoseconds, without any pauses for refreshing, row switching, or writing. That's a pretty tall order!

The picture generator doesn't need a constant stream of pixel data from memory, though: after every visible row there is a break of 4,995 nanoseconds for the horizontal blanking period, and then between frames there is a break of 668,250 nanoseconds for the horizontal blanking period. If we consider that each entire frame takes 16.7 microseconds (16,700,000 nanoseconds) then on average we have just over 18 nanoseconds to produce the data for each pixel.

That then raises a question: is there some way we can smooth out the interruptions caused by row switching and refreshing by reading from RAM as fast as possible, regardless of whether the picture generator is currently actively consuming pixels?

Decoupling with an Asynchronous FIFO

A FIFO is a queue data structure where values are read out in the same order they are added: the abbreviation is short for "first in, first out". A FIFO queue is a typical solution to the problem of a producer and a consumer that are both operating at independent (and possibly variable) speeds.

A FIFO queue serves as a buffer that can store values that have been generated by the producer but not yet used by the consumer, thus allowing the producer to temporarily run faster than the consumer and the consumer to then "catch up" when it's ready, without losing any values.

As long as there is space left in the queue's buffer, the producer can keep working. If the buffer fills up, the producer must stop work and wait for the consumer to process one or more values before continuing.

In the video controller system, the component that is reading from DRAM can play the role of producer. It will read sequential pixel data from the memory as fast as it can, pausing briefly where necessary to switch rows or perform a refresh, and add them to a queue.

The picture generator can then act as the consumer: for every pixel clock in the active picture area, it will take one pixel's worth of data from the queue and transmit it. During the blanking periods, it will not consume values from the queue at all, allowing the producer to recover from time lost due to bookkeeping tasks and ensure there's always at least one more pixel's worth of data in the queue when the picture generator needs it.

Below is a visualization of the process of producing an image frame with a producer feeding pixel data to a consumer using a FIFO queue with a buffer size of 128 pixel values. (The visualization starts paused. Click or touch the area below, or focus it and press the space bar, to make it start running)

At the top of the area above is a visualization of the contents of the 128-element buffer used for the FIFO queue. The queue is implemented using a circular buffer, and so the portion between the consumer pointer (shown under the buffer, labelled "C") and the producer pointer (shown above the buffer, labelled "P") contains the current values in the queue, and the remainder of the buffer at a particular instant (shown as dark grey) is unused.

Under the queue visualization is a representation of the screen we're writing to. Normally the scan rate would be so fast that the raster would not be visible, but I've slowed it down considerably here so we can see how the picture generator is reading color values from the buffer at the consumer pointer.

The queue is "full" if all 128 of the buffer elements are occupied, and "empty" if none of them are occupied. Because the picture generator needs to consume values at a constant rate during the active picture periods, our goal is to ensure that the buffer never becomes empty.

In order to make the visualization easier to see, it represents building only a 320×240 pixel image, which means a much slower pixel clock rate than we'd see for 720p. To compensate, I've also lowered the effective clock rate for the memory (the producer) so that it doesn't get an unfair advantage compared to how things might behave for a 720p signal.

Notice that the producer marker moves rightwards in a jerky fashion, simulating pauses to do bookkeeping work like row switches and refreshes. The consumer marker, on the other hand, moves rightwards at a steady rate during the active picture area, and so the producer gradually falls behind the consumer, with the number of active queue elements gradually shrinking.

It's only when the raster reaches the end of a scan line, during the horizontal blanking interval, that the producer gets an opportunity to catch up. The producer can keep appending new items to the queue as fast as it can until the buffer is full, at which point it stops producing new values until the consumer begins reading again, during the next scanline.

The simulation is also representing another characteristic of dynamic RAM that we didn't discuss yet: DRAM chips prefer to produce data in bursts, where the reader chooses a start address and then reads a number of subsequent consecutive addresses within the same row without the need to start a whole new request. That's a helpful capabililty because starting a new read has column address strobe (CAS) latency, which is a delay between sending the read request and the requested data becoming available. The simulation represents this by showing the producer writing items in chunks of several items in succession, and waiting until there's at least enough room in the buffer for one burst's worth of data before reading it.

The FIFO queue serves to decouple the producer and consumer, so that as long as on average the producer is running faster than the consumer the consumer can assume new pixel values will always be available immediately when needed, even though the producer isn't able to guarantee a sustained data rate.

FIFOs in Digital Logic

So far we've been discussing FIFO queues in theory, but I think it's well past time to talk about some practice.

For digital logic designs deployed into an FPGA, we typically employ sequential logic where the system is presented with a clock signal rising and falling at a predictable rate and uses the clock signal to synchronize the process of computing new values for registers by making calculations based on the values decided at the previous clock edge.

By taking all actions in response to the same clock, we can be sure that the result will (as long as the clock isn't running too fast) always be consistent: we can ensure that we're not trying to read a register value at the same time as writing it, which would risk an undefined result (due to metastability).

The problem with our idea of running our memory controller module faster than our pixel clock is that this means the system now has multiple clock signals: the pixel clock running at 74.25MHz and the memory clock running at, for example, 100MHz.

In digital logic design these modules are said to be asynchronous from one another, and so if we intend to use a FIFO queue between them then we need a special variant of FIFO known as an asynchronous FIFO, which uses a separate clock for the producer and consumer sides and must take special efforts to avoid internal metastability when values cross between the two sides.

Clock-domain Crossing

The general problem of communicating between two parts of a circuit that use different clocks is called clock-domain crossing. A clock domain is all of the synchronous logic elements that respond to a particular clock. Different clocks may have different frequencies and may be out of phase, and so synchronous logic elements in one clock domain may be in the middle of transitioning between logic levels at the arrival of a clock edge in another clock domain.

A typical approach to send one bit of information between two clock domains is to pass the value through three registers (flip-flops), with one in the clock domain of the source and the other two in the clock domain of the destination. The following is a Verilog representation of that process:

module crossdomain ( input reset, input clk, input data_in, output reg data_out ); reg [1:0] data_tmp; always @(posedge clk) begin if (reset) begin {data_out, data_tmp} <= 0; end else begin {data_out, data_tmp} <= {data_tmp, data_in}; end end endmodule

In the above module, data_in is assumed to be the output of a register that

is updated in a different clock domain than clk. Every positive edge of

clk, the higher bit of data_tmp is copied into the output register,

the lower bit of data_tmp is copied into the higher bit, and data_in is

sampled and its value written into the lower bit of data_tmp.

If data_in is sampled during a transition then it could read either as its

old value or its new value; in case it reads as the old value, notice of the

change will be delayed by one cycle of clk.

This risk of delay comes in return from removing the risk of metastability, but

has an interesting characteristic: data_out will only show a change if

data_in remains stable long enough for this always block to sample it:

if data_in is updated at a faster clock rate than clk and it only changes

for one clock pulse then the other clock domain may not see the brief change

at all. This technique is therefore only suitable for ongoing signals, and not

for momentary pulses.

The freedom from metastability also relies on the fact that only a single bit

is being sampled: if the above were extended so that all of the data signals

and registers were four bits wide, and if data_in were sampled during

a transition from 0b1111 to 0b0000, each bit may appear to have a different

logic level depending on how far through the voltage transition it has got,

which might cause data_out to show an entirely different value that is

neither the old nor the new value, such as 0b1011.

With this technique in our back pocket, let's talk about what building blocks we need to represent an asynchronous FIFO in digital logic.

The Makings of an Asynchronous FIFO

From what we've seen so far, it seems that the building blocks for an async FIFO include:

Two separate clock signals: one for the writer and one for the reader.

A buffer: in an FPGA, this can be implemented with an internal dual-port RAM inside the FPGA itself, allowing access from both clock domains as long as they don't both try to access the same element concurrently.

A write pointer that indicates the slot in the buffer that should be used for the next value written, incremented on each write. This will be a multi-bit register belonging to the write clock domain.

A read pointer that indicates the slot in the buffer that holds the current value to read, incremented after each read. This will be a multi-bit register belonging to the read clock domain.

A "can write" signal which is asserted when there's at least one unused slot in the buffer where a new value could be written. This would be updated in the write clock domain.

A "can read" signal which is asserted when there's at least one value in the buffer that can be read. This would be updated in the read clock domain.

At first glance, the above list looks promising because everything except the buffer is described as belonging to only a single clock domain, and due to the FIFO discipline each element in the buffer is either active (accessed only by the reader) or inactive (accessed only by the writer, as part of extending the active area).

However, a problem becomes apparent when we consider what we need to implement those "can write" and "can read" signals:

"Can write" is true if and only if the write pointer is less than the read pointer.

"Can read" is true if and only if the write pointer is greater than the read pointer.

The write pointer and the read pointer are in separate clock domains, so they are likely to update asynchronously from one another, which may cause one or both of these signals to temporarily show an incorrect answer during a transition.

To solve this, it seems that we need to send a copy of the write pointer to the read clock domain and a copy of the read pointer to the write clock domain, thus allowing us to compute both of these flags safely. Because we know that both pointers only ever increment, it's okay in practice if these copies are delayed: the receiving domain may see a lower value for one or two clocks longer than strictly necessary, but that will cause the corresponding flag to be "pessimistic", preventing the opposing pointer from incrementing too far.

So how can we transmit the pointers between clock domains?

Safely Transmitting Multi-bit Values: Grey Code

Earlier on we saved in our back pockets a tool for sending values safely between clock domains: passing a bit through a series of registers. However, a key constraint of that technique is that it can safely represent only one bit changing per clock, because otherwise multiple values changing together may not all transition between logic levels at the same instant.

This presents a problem for sending our read and write pointers, because each one is an integer represented by multiple bits. For example, if our buffer had eight entries then our pointers would each be three bits long, counting in binary like this:

0:

0b0001:

0b0012:

0b0103:

0b011...

Changing from 1 to 2 implies both the first and second bits changing at

the same time. If we're unlucky in our timing, our domain-crossing module above

might sample these at a point where only one has updated, yielding an incorrect

value like 0b0000 or 0b0011. The reciever would then calculate its flag

incorrectly, potentially causing the "can write" pointer to be asserted at

a wrong time and then corrupting the queue.

Fortunately, there is an answer to this quandry courtesy of a man called Frank Grey, who in the 1940s devised a different encoding of binary numbers which we now call Grey Code.

Grey code defines a different arrangement of bits to represent each integer, arranged so that incrementing the source value by one always changes only one bit in the encoded value. The full Grey Code subsitution for three-bit values is enumerated in the following table:

| Decimal | Binary | Grey Code |

| 0 | 0b000 | 000 |

| 1 | 0b001 | 001 |

| 2 | 0b010 | 011 |

| 3 | 0b011 | 010 |

| 4 | 0b100 | 110 |

| 5 | 0b101 | 111 |

| 6 | 0b110 | 101 |

| 7 | 0b111 | 100 |

If we were to transmit our transition from 1 to 2 in Grey Code, only the

first bit needs to change and so even if the receiver samples the signals

during the transition the resulting values can only be either 001 or 011,

which restores the characteristic of replacing metastability with the risk

of a one-clock delay if our timing is unlucky.

Although I presented Grey Code as a lookup table above, there's actually a relatively-straightforward bitwise calculation to produce it:

grey(x) = (x >> 1) ^ x

The above equation is using C and Verilog notation where >> 1 means to

shift bits one to the right and ^ means exclusive OR.

If we use this equation to produce the Grey-coded eqivalent of our pointers each time they change, then we can safely send them to the opposite clock domain, from which we can compute the "can read" and "can write" signals. But how do we implement less than and greater than of Grey code values?

I actually cheated a little here: I chose to represent the pointers with one additional "wrap-around" bit so that the "empty" (cannot read) case can be represented by both pointers being equal, and the "full" (cannot write) case can be represented by both pointers being equal except for the wrap around bit, which we can then adapt for grey code with a little trickery (assuming an eight-entry buffer again, so three bits plus the additional wrap-around bit):

can_read = read_ptr_grey != write_ptr_grey

can_write = write_ptr_grey != { ~read_ptr_grey[4:3], read_ptr_grey[2:0] }

The above uses the Verilog notation where read_ptr_grey[4:3] means to take

bits three and four of read_ptr_grey, and ~ means a bitwise NOT. We end

up needing to compare invert bits rather than one in can_write because the

bit shift in the grey code function means that the wrap-around bit ends up

contributing to bits three and four.

Bringing it all into Verilog

This article is long enough as it is, so I'll now just show the full Verilog module I wrote to implement an async FIFO, with inline comments explaining the role that each part is playing. The real implementation uses a 256-entry buffer with a 16-bit value per entry, because that happens to correspond to exactly one of the dual-port block RAMs on the Lattice ICE40 FPGA I'm using for development.

// This is a generalization of the crossdomain module from earlier that // now supports a customizable value size, so we can safely transmit multi-bit // values as long as they are grey coded. module crossdomain #(parameter SIZE = 1) ( input reset, input clk, input [SIZE-1:0] data_in, output reg [SIZE-1:0] data_out ); reg [SIZE-1:0] data_tmp; always @(posedge clk) begin if (reset) begin {data_out, data_tmp} <= 0; end else begin {data_out, data_tmp} <= {data_tmp, data_in}; end end endmodule // Async FIFO implementation module asyncfifo( input reset, input write_clk, input write, input [DATA_WIDTH-1:0] write_data, output reg can_write, input read_clk, input read, output reg [DATA_WIDTH-1:0] read_data, output reg can_read ); parameter DATA_WIDTH = 16; parameter BUFFER_ADDR_WIDTH = 8; parameter BUFFER_SIZE = 2 ** BUFFER_ADDR_WIDTH; // Our buffer as a whole is accessed by both the write_clk and read_clk // domains, but read_clk is only used to access elements >= read_ptr and // write_clk only for elements < read_ptr. We're expecting this buffer to // be inferred as a dual-port block RAM, so the board-specific top module // should choose a suitable buffer size to allow that inference. reg [DATA_WIDTH-1:0] buffer[BUFFER_SIZE-1:0]; ///// WRITE CLOCK DOMAIN ///// // This is an address into the buffer array. // It intentionally has one additional bit so we can track wrap-around by // comparing with the MSB of read_ptr (or, at least, with the grey-code // form that we synchronize over into this clock domain.) reg [BUFFER_ADDR_WIDTH:0] write_ptr; wire [BUFFER_ADDR_WIDTH-1:0] write_addr = write_ptr[BUFFER_ADDR_WIDTH-1:0]; // truncated version without the wrap bit // This is the grey-coded version of write_ptr in the write clock domain. reg [BUFFER_ADDR_WIDTH:0] write_ptr_grey_w; // This is the grey-coded version of read_ptr in the write clock domain, // synchronized over here using module read_ptr_grey_sync declared later. wire [BUFFER_ADDR_WIDTH:0] read_ptr_grey_w; // Write pointer (and its grey-coded equivalent) increments whenever // "write" is set on a clock, as long as our buffer isn't full. wire [BUFFER_ADDR_WIDTH:0] next_write_ptr = write_ptr + 1; wire [BUFFER_ADDR_WIDTH:0] next_write_ptr_grey_w = (next_write_ptr >> 1) ^ next_write_ptr; // Our buffer is full if the read and write addresses are the same but the // MSBs (wrap bits) are different. We compare the grey code versions here // so we can use our cross-domain-synchronized copy of the read pointer. wire current_can_write = write_ptr_grey_w != { ~read_ptr_grey_w[BUFFER_ADDR_WIDTH:BUFFER_ADDR_WIDTH-1], read_ptr_grey_w[BUFFER_ADDR_WIDTH-2:0] }; wire next_can_write = next_write_ptr_grey_w != { ~read_ptr_grey_w[BUFFER_ADDR_WIDTH:BUFFER_ADDR_WIDTH-1], read_ptr_grey_w[BUFFER_ADDR_WIDTH-2:0] }; always @(posedge write_clk or posedge reset) begin if (reset) begin write_ptr <= 0; write_ptr_grey_w <= 0; can_write <= 1; end else begin if (write && can_write) begin write_ptr <= next_write_ptr; write_ptr_grey_w <= next_write_ptr_grey_w; can_write <= next_can_write; end else begin can_write <= current_can_write; end end end // If "write" is set on a clock then we commit write_data into the current // write address. always @(posedge write_clk) begin if (write && can_write) begin buffer[write_addr] <= write_data; end end ///// READ CLOCK DOMAIN ///// // This is an address into the buffer array. // It intentionally has one additional bit so we can track wrap-around by // comparing with the MSB of write_ptr (or, at least, with the grey-code // form that we synchronize over into this clock domain.) reg [BUFFER_ADDR_WIDTH:0] read_ptr; wire [BUFFER_ADDR_WIDTH-1:0] read_addr = read_ptr[BUFFER_ADDR_WIDTH-1:0]; // truncated version without the wrap bit // This is the grey-coded version of write_ptr in the read clock domain. reg [BUFFER_ADDR_WIDTH:0] read_ptr_grey_r; // This is the grey-coded version of write_ptr in the read clock domain, // synchronized over here using module write_ptr_grey_sync declared later. wire [BUFFER_ADDR_WIDTH:0] write_ptr_grey_r; // Read pointer (and its grey-coded equivalent) increments whenever // "read" is set on a clock, as long as our buffer isn't full. wire [BUFFER_ADDR_WIDTH:0] next_read_ptr = read_ptr + 1; wire [BUFFER_ADDR_WIDTH:0] next_read_ptr_grey_r = (next_read_ptr >> 1) ^ next_read_ptr; // Our buffer is empty if the read and write addresses are the same and the // MSBs (wrap bits) are also equal. We compare the grey code versions here // so we can use our cross-domain-synchronized copy of the write pointer. wire current_can_read = read_ptr_grey_r != write_ptr_grey_r; wire next_can_read = next_read_ptr_grey_r != write_ptr_grey_r; always @(posedge read_clk or posedge reset) begin if (reset) begin read_ptr <= 0; read_ptr_grey_r <= 0; read_data <= 0; can_read <= 0; end else begin if (read) begin if (can_read) begin read_ptr <= next_read_ptr; read_ptr_grey_r <= next_read_ptr_grey_r; end can_read <= next_can_read; if (next_can_read) begin read_data <= buffer[next_read_ptr]; end else begin read_data <= 0; end end else begin can_read = current_can_read; if (current_can_read) begin read_data <= buffer[read_addr]; end else begin read_data <= 0; end end end end ///// CROSS-DOMAIN ///// // Synchronize read_ptr_grey_r into read_ptr_grey_w. crossdomain #(.SIZE(BUFFER_ADDR_WIDTH+1)) read_ptr_grey_sync ( .reset(reset), .clk(write_clk), .data_in(read_ptr_grey_r), .data_out(read_ptr_grey_w) ); // Synchronize write_ptr_grey_w into write_ptr_grey_r. crossdomain #(.SIZE(BUFFER_ADDR_WIDTH+1)) write_ptr_grey_sync ( .reset(reset), .clk(read_clk), .data_in(write_ptr_grey_w), .data_out(write_ptr_grey_r) ); endmodule

Here's the signal output from a testbench I wrote for the asyncfifo module,

simulated with Icarus Verilog and visualized with GTKWave:

As expected, the can_read and can_write signals "lag behind" operations in

the opposite clock domain, but they do so in a safe, pessimistic way by

staying false longer than necessary. The slower clock of the receiver means

that the four values are consumed more slowly than they were tranmitted, and

(for testing purposes only) the sender intentionally transmits one additional

message even though can_write is false, showing that the invalid write is

safely discarded rather than corrupting the queue.

This is all just in simulation, of course, and simulation isn't subject to

metastability so we'll need to try this in real hardware before we'll know

for sure it's working. Next time, I'm hoping to write the producer component

to read data from dynamic RAM and write it into the buffer, which will allow

me to put this asyncfifo module into practice in an FPGA.